Raft: Consensus made simple(r)

Consensus is one of the fundamental problems in distributed systems. We want

clients to perceive our system as a single coherent unit, but at the same time

we don’t want to have a single point of failure. We need to have several

machines collaborating in a way that they can all agree on the state of the

world, even though a lot of things can go wrong. Nodes can crash, messages can

be delivered out of order or not be delivered at all, and different nodes can

have a different idea of what the world looks like. Making a distributed system

behave like a coherent unit in face of these failures can be a challenge, and

that’s why we sometimes need a consensus algorithm, like Raft, that gives us

some guarantees about the properties that we can expect of this system.

What is Raft

Raft is a consensus algorithm that was created with the goal of being

understandable. This is a direct response to Paxos, which is probably the most

well-known algorithm in this space. Paxos solves the same type of problem, but

it’s a fairly complicated algorithm, and Raft promises to give us the same

guarantees, while being a lot simpler.

It’s currently used in several large scale system, like Consul, etcd and InfluxDB, so it’s pretty mature and battle-tested.

How it works

Raft works by keeping a replicated log. This log is an append-only data

structure where new entries are added, and only a single server, the leader, is

responsible for managing this log. Every write request is sent to the leader

node, and this node will distribute it to the follower nodes and make sure the

client receives a confirmation for this write just when the data is safely

stored. Let’s get into the details.

The consensus problem is divided into three sub-problems: Leader election, Replication and Safety.

Leader election

Every node will always be in one of these three states: Leader, Follower or

Candidate, and we should never have more than one leader at the same time. Time

in Raft is divided into terms, which is basically an arbitrary period of time,

identified by a number that is sequentially incremented.

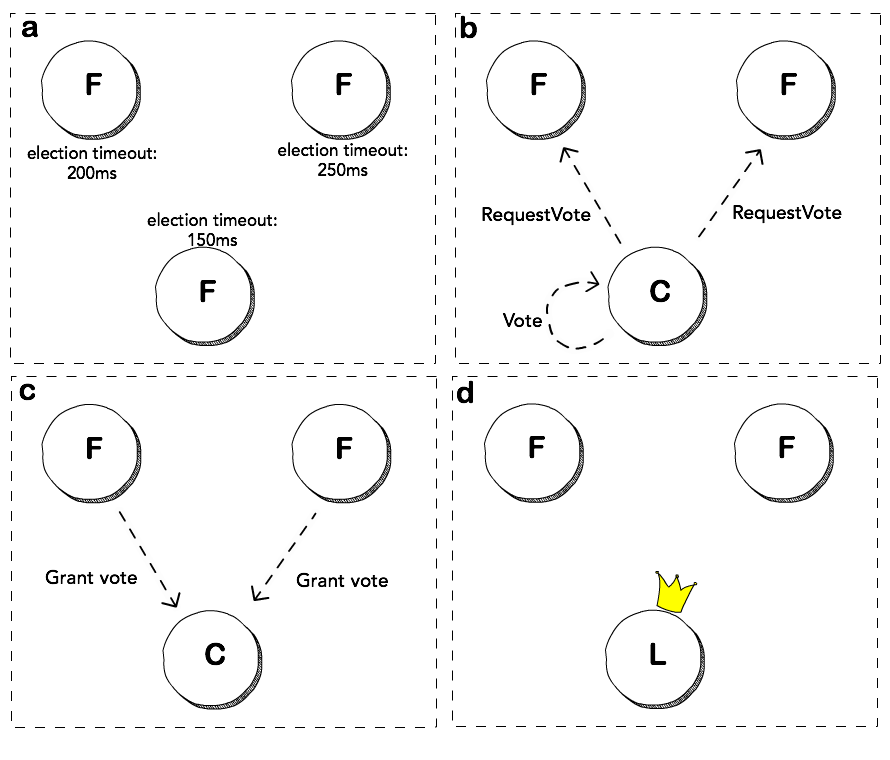

A server always starts as a follower, and it expects a heartbeat from the

leader. The follower will wait for this heartbeat for some time (defined as the

election timeout), and if it does not receive it, it will assume the leader is

dead and transition to the Candidate state. After it goes to this state, the

first thing it will do is to vote for itself, and then send a vote request to

all the other nodes (this request is an RPC called RequestVote). If it receives

a confirmation for this request from the majority of the nodes in this cluster

(e.g. 3 out of 5), it transitions to the Leader state.

There are some interesting things that can happen here, though, and it’s where

Raft’s focus on understandability becomes apparent.

First, if all nodes start at the same time, they would all also timeout at the

same time, meaning every node would trigger this same RequestVote RPC, making

it a lot harder for a single node to obtain the majority of the votes. Raft

mitigates this issue by using a randomized election timeout for each node,

meaning one of the followers will usually timeout before the others, likely

becoming the new leader.

Even having this randomized timeout, we can still have a split vote situation, where none of the nodes have the majority of the votes. For example, in a cluster of 5 nodes when the leader dies we would end up with 4 nodes, and if 2 of these nodes timeout roughly at the same time, each one could get 2 votes, so none of them can become the leader. The solution is as simple as it can be: Just wait for another timeout, that will most likely solve the issue. When this timeout happens and the term doesn’t have a leader, a new term will be initiated, and each node will have a new random timeout value for the next election, that is probably not the same again. We will have a performance penalty because of that, but this timeout is usually just a few milliseconds, and a split vote situation should be quite rare.

Log Replication

This is the part that we really care about: How to keep this replicated log.

After we have an elected leader, every request is sent to this node. If a

follower node receives a request it can just redirect it to the leader or return

an error to the client, indicating which node is the leader.

When the leader receives a request, it first appends it to its log, and then send

a request to every follower so they can do the same thing. This RPC is called

AppendEntries. Although the message was appended to the log, it was not

committed yet, and the client didn’t get a confirmation that the operation

succeeded. Just after the leader gets a confirmation from the majority of the

nodes it can actually commit the message, knowing it’s safely stored, and then

respond to the client. When the followers receive the next heartbeat message

(that is just an empty AppendEntries RPC) they know they can also commit this

message.

Other than the command sent by the client, each log entry also has a term number and an index. The term just defines a unit of time (and, remember, each term has no more than one leader), and the index is the position in the log. Let’s understand why recording these two values is important.

Safety

To ensure that every log is correctly replicated and that commands are executed in the same order, some safety mechanisms are necessary.

The Log Matching Property

Raft maintains the Log Matching Property property, that says that if two

distinct log entries have the same term number and the same index, then they

will:

- Store the exact same command;

- Be identical in all the preceding entries.

As the leader will never create more than one entry with the same index in the same term, the first property is fulfilled

The second property, guaranteeing that all the preceding entries are identical,

is achieved by a consistency check that the followers perform when they receive

an AppendEntries RPC.

It works like this: The leader keeps track of the highest index that is

committed in its log, and send that information in every AppendEntries RPC

(even heartbeats). If the follower does not find an entry with that index in

its local log, it will reject the request, so if the AppendEntries RPC returns

successfully, the leader knows that its log and the follower’s are identical.

When the nodes are operating normally, these logs will always be consistent.

When a leader crashes, though, this log can be left inconsistent, and that’s

when AppendEntries’s consistency check will help us. Imagine this scenario:

- We have three nodes,

N1,N2andN3,N1being the leader; N1replicates the messagesterm=1; index=1; command=xandterm=1; index=2; command=ywithN2, butN3never gets these messages;- Now

N1crashes andN2becomes the new leader; - If

N2tries to replicate the messageterm=2; index=3; command=ztoN3, the consistency check will reject this message, as the highest committed index (3) is not present inN3’s log; N2will then go back in the log and transmit all the entries after the latest entry present inN3, making the logs consistent again.

Election Restriction

This property guarantees that a candidate will never win the leader election if

it does not have all the committed entries in its own log. As an entry needs to

be present in the majority of the nodes to be considered committed, when an

election is taking place at least one node will have the latest committed entry.

If a follower node receives a RequestVote RPC from a candidate that is behind

in the log (meaning a smaller term number, or same term number but smaller

index), it will not grant its vote to this candidate.

In the example above we have three logs, each entry represented with the term

number in which it was created.

In this case, Node 1 was the leader, and was able to commit up to index 5,

where it got a confirmation from the majority of the nodes (itself and Node

2). If Node 1 dies and a new election starts, maybe Node 3 can be the first

to transition to the Candidate state and try to become the leader. This would be

a problem, as its log does not have the latest committed entry (term 3, index

5). When it sends a RequestVote to Node 2, this node will notice that its

own log is more up to date than Node 3’s, and therefore will not grant its

vote, making it impossible for Node 3 to become the leader.

Summary

Raftis divided into 3 parts: Leader election, log replication and safety;- A node can be in one of these three states: Follower, Candidate or Leader;

- Every node starts as a Follower, and after an election timeout transitions to the candidate state;

- A Candidate will vote for itself and send

RequestVoteRPCs to all the other nodes; - If it gets votes from the majority of the nodes, it becomes the new Leader;

- The leader is the only node responsible for managing the log, followers just add new entries to their logs in response to the leader

AppendEntriesRPC; - When the leader receives a command from the client, it first saves this uncommitted message, then sends it to every follower;

- When it gets a successful response from the majority of nodes, the command is committed and the client gets a confirmation;

- In the next

AppendEntriesRPC sent to the follower (that can be a new entry or just a heartbeat), the follower also commits the message; - The

AppendEntriesRPC implements a consistency check, to guarantee its local log is consistent with the leader’s; - A follower will just grant its vote to a candidate that has a log at least as up to date as its own;

Other resources

There are a lot of details left out in this post, so I really encourage you to check out the entire Raft paper, which is quite readable. There’s also a Raft website with a lot of resources, including implementations in several different languages. This Raft visualization also helps a lot to understand how the leader election and replication works, going step by step and explaining everything that is happening, even though it will not cover every scenario described in the paper.

And this is a great lecture by one of the authors:

Interested in learning Kubernetes?

I just published a new book called Kubernetes in Practice, you can use the discount code blog to get 10% off.